In 2013, IBM and the University of Texas MD Anderson Cancer Center developed an AI-based Oncology Expert Advisor. According to IBM Watson, it analyzes patients' medical records and summarizes and extracts information from the vast medical literature and research. The goal was an assistive solution that helps oncologists make better decisions. The project cost more than $62 million. According to an article on The Verge, the product made a series of poor recommendations. For example, it recommended a drug that would increase bleeding to a patient who was already suffering from bleeding.

“A parrot with an internet connection” were the words used to describe a modern AI-based chat bot built by engineers at Microsoft in March 2016. Tay, a conversational Twitter bot, was designed to have ‘playful’ conversations with users and to learn from those conversations. It took Twitter users just 24 hours to corrupt it. In that time it went from saying “humans are super cool” to “Hitler was right I hate jews”. Naturally, Microsoft had to take the bot down.

According to an article published by The Guardian, Amazon went for a moonshot. It wanted an AI that could digest hundreds of resumes and pick out the top 5, and those candidates would then be hired. The system was trained on resumes the firm had received over a 10-year period. In use, it was found to penalize resumes that included terms like ‘woman’, which created a bias against female candidates. The tech industry is dominated by men, so the training data reflected that imbalance and the model learned it. Eventually, Amazon stopped the project.

The above were a few handpicked extreme cases. Not all Machine Learning failures are that spectacular, but they can still lead to losses. Many advanced Machine Learning models today are black boxes, which means it is hard to interpret how they arrive at their decisions. Moreover, these algorithms are only as good as the data they are fed.

The question arises: how do you monitor whether your model will actually work once it is trained?

Why is tracking a model’s performance difficult?

The model training process follows a fairly standard framework. The basic steps are:

- Collect a large number of data points and their corresponding labels.

- Split them into training, validation and test sets.

- Train models on the training set and select the best one among the experiments you tried.

- Measure the accuracy (or some other metric) on the validation and test sets.

- If the metric is good enough, we should expect similar results after the model is deployed into production.
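The steps above can be sketched in plain Python. This is a minimal illustration under our own assumptions. The 70/15/15 split, the fixed seed, the toy data set, and the names `split_dataset` and `parity_model` are all hypothetical. In practice, you would more likely use a library helper such as scikit-learn's `train_test_split`.

```python
import random


def split_dataset(examples, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle labeled examples and split them into train, validation and test sets.

    Whatever is left after the train and validation fractions becomes the test set.
    """
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must be strictly between 0 and 1")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


def accuracy(model, dataset):
    """Fraction of (x, y) pairs for which model(x) == y."""
    return sum(model(x) == y for x, y in dataset) / len(dataset)


# Toy data set: the label is 1 when x is even.
data = [(x, int(x % 2 == 0)) for x in range(1000)]
train, val, test = split_dataset(data)


def parity_model(x):
    """Stand-in for a trained model (hypothetical)."""
    return int(x % 2 == 0)


print(len(train), len(val), len(test))
print("validation accuracy:", accuracy(parity_model, val))
print("test accuracy:", accuracy(parity_model, test))
```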

So what’s the problem with this approach?

For starters, the production data distribution can be very different from the training or validation data. For example, suppose you have a new app that detects sentiment in user comments, but you don’t have any app-generated data yet. Your best bet could be to train a model on an open data set, make sure the model works well on it, and use it in your app. There’s a good chance the model won't perform well, because the data it was trained on may not represent the data your app's users generate.

Another problem is that ground truth labels for live data aren't always available immediately. In production, a model makes predictions for a large number of requests, and getting ground truth labels for every request is simply not feasible. A simple approach is to randomly sample requests and manually check whether the predictions match the true labels.
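A minimal sketch of that sampling approach follows. It assumes predictions are logged as `(request_id, predicted_label)` records. A reviewer function stands in for the humans who provide the true labels. The log contents, the reviewer, and the sample size of 400 are hypothetical. The normal-approximation confidence interval is one simple way to express how much a sample of that size can tell you.

```python
import math
import random


def sample_for_review(prediction_log, sample_size, seed=0):
    """Pick a uniform random subset of logged predictions for manual labeling."""
    rng = random.Random(seed)
    return rng.sample(prediction_log, min(sample_size, len(prediction_log)))


def estimate_accuracy(reviewed, z=1.96):
    """Estimate live accuracy from (prediction, true_label) pairs.

    Returns (estimate, lower, upper) using a normal-approximation confidence
    interval; z=1.96 gives roughly 95% coverage when the sample is not tiny.
    """
    n = len(reviewed)
    p = sum(pred == label for pred, label in reviewed) / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - margin), min(1.0, p + margin)


# Hypothetical log of 100,000 (request_id, predicted_label) records.
log = [(i, "negative" if i % 3 == 0 else "positive") for i in range(100_000)]
to_review = sample_for_review(log, sample_size=400)


def reviewer_label(request_id):
    """Stand-in for a human reviewer; real labels would come from people."""
    return "negative" if request_id % 3 == 0 and request_id % 7 != 0 else "positive"


reviewed = [(pred, reviewer_label(rid)) for rid, pred in to_review]
print(estimate_accuracy(reviewed))
```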

But even this is not possible in many cases. Consider credit card fraud prediction, where a model predicts whether a credit card transaction is fraudulent. For millions of live transactions, it can take days or weeks to find out the ground truth label. This is unlike an image classification problem, where a human can identify the ground truth in a split second.

So does this mean you’ll always be blind to your model’s performance?

How to track a model's performance?

In the earlier section, we discussed how this question cannot be answered directly and simply. But it’s possible to get a sense of what’s right or fishy about the model. Let’s look at a few ways.

Offline methods

Even before you deploy your model, you can experiment with your training data to get an idea of how much worse it will perform over time. Josh Wills states in his talk, "If I train a model using this set of features on data from six months ago, and I apply it to data that I generated today, how much worse is the model than the one that I created [trained] off of data from a month ago and applied to today?". This gives a sense of how changes in the data degrade your model's predictions.
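Here is a minimal sketch of this check on synthetic data we made up for illustration. It assumes the relationship between a single feature and the target drifts a little every month. A model fit on six-month-old data and a model fit on last month's data are both scored on today's data. The drift rate, the one-feature least-squares model, and all function names are assumptions, not part of the talk.

```python
import random


def make_month(month, n=500, seed=0):
    """Synthetic data for one month: y = slope * x + noise, with a drifting slope."""
    rng = random.Random(seed + month)
    slope = 1.0 + 0.2 * month  # assumed drift: the relationship changes every month
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [slope * x + rng.gauss(0, 1) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b with a single feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x


def mse(model, xs, ys):
    """Mean squared error of a fitted (a, b) line on the given data."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


TODAY = 12
today_x, today_y = make_month(TODAY)
model_6_months_old = fit_line(*make_month(TODAY - 6))
model_1_month_old = fit_line(*make_month(TODAY - 1))

stale = mse(model_6_months_old, today_x, today_y)
fresh = mse(model_1_month_old, today_x, today_y)
print(f"trained 6 months ago: MSE {stale:.1f}")
print(f"trained 1 month ago:  MSE {fresh:.1f} ({stale / fresh:.0f}x better)")
```

The ratio at the end is the "how much worse" number from the quote. If it is large, you should plan to retrain often.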

This is particularly useful in time-series problems. For example, suppose you have to predict next quarter’s earnings using a Machine Learning algorithm. You cannot tell whether your model performed well or badly until the next quarter is over. Instead, you can hold out the most recent completed quarter, train the model on the quarters before it, and test it on the held-out quarter. This won't give you a perfect estimate, because the model you actually deploy will also be trained on that quarter's data. But it can give you a sense of whether the model is going to behave bizarrely in a live environment.
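The same idea can be repeated for several past quarters as a walk-forward backtest. For each quarter, the model is fit only on the quarters before it, and its forecast is compared with what actually happened. This is a minimal sketch. The naive "average growth" forecaster and the earnings figures are made up for illustration, so substitute your own model and history.

```python
def forecast_next(history):
    """Toy forecaster: last value times the average quarter-over-quarter growth."""
    growth = [b / a for a, b in zip(history, history[1:])]
    return history[-1] * sum(growth) / len(growth)


def walk_forward_backtest(series, min_history=4):
    """Forecast each quarter after `min_history` using only the quarters before it.

    Returns a list of (quarter_index, forecast, actual, absolute_percentage_error).
    """
    results = []
    for t in range(min_history, len(series)):
        pred = forecast_next(series[:t])  # the model never sees quarter t or later
        actual = series[t]
        results.append((t, pred, actual, abs(pred - actual) / abs(actual)))
    return results


# Hypothetical quarterly earnings, in millions.
earnings = [100, 104, 109, 113, 118, 121, 127, 131]
results = walk_forward_backtest(earnings)
for t, pred, actual, ape in results:
    print(f"Q{t}: forecast={pred:.1f} actual={actual} error={ape:.1%}")
```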

Examining distributions

Agreed, you don’t have labels, and it is not possible to examine each example individually. But you can get a sense of whether something is wrong by looking at the distributions of features across thousands of predictions made by the model.

Consider the example of a voice assistant. You trained a speech recognition model on a data set you outsourced specially for this project. You used the best algorithm and got a validation accuracy of 97%. Everyone on your team, including you, was happy with the results, so you deployed the model into production. All of a sudden, there are thousands of complaints that the bot doesn’t work. You dive into the issue. It turns out that construction workers have started using your product on site. Their input has a lot of background noise that never appeared in your training data, which contained only clear speech samples. You hadn't considered this possibility. The features generated for the training and live examples came from different sources and followed different distributions.
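One simple way to compare a feature's training distribution with its live distribution is the two-sample Kolmogorov-Smirnov (KS) statistic. It is the largest gap between the two empirical cumulative distribution functions. The sketch below computes it in plain Python. The "background noise level" feature, the synthetic values, and the 0.2 alert threshold are assumptions for illustration. SciPy's `scipy.stats.ks_2samp` computes the same statistic along with a p-value.

```python
import bisect
import random


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(v) - ECDF_b(v)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    for v in a + b:  # the largest gap occurs at one of the observed values
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


rng = random.Random(7)
# Hypothetical feature: background noise level (dB) per audio clip.
train_noise = [rng.gauss(30, 5) for _ in range(2000)]  # clean recordings
live_noise = (
    [rng.gauss(30, 5) for _ in range(1500)]    # ordinary users
    + [rng.gauss(75, 8) for _ in range(500)]   # construction sites
)

ALERT_THRESHOLD = 0.2  # assumed; tune it on historical, drift-free data
gap = ks_statistic(train_noise, live_noise)
if gap > ALERT_THRESHOLD:
    print(f"Feature drift suspected: KS statistic = {gap:.2f}")
```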

You can also examine the distribution of the predicted variable. If you are dealing with a fraud detection problem, your training set is most likely highly imbalanced (for example, 99% of transactions are legitimate and 1% are fraudulent). If your predictions show that 10% of transactions are fraudulent, that’s an alarming situation.
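This check is easy to automate. Compare the share of positive predictions in a recent window with the base rate seen in training, and alert when it moves too far. In this minimal sketch, the 1% base rate comes from the example above. The window contents and the 3x tolerance factor are assumptions.

```python
def predicted_positive_rate(predictions):
    """Share of a window of 0/1 predictions that were flagged as fraud (1)."""
    return sum(predictions) / len(predictions)


def check_prediction_rate(predictions, expected_rate=0.01, tolerance_factor=3.0):
    """Return an alert message if the flagged rate drifts far from the training base rate.

    `tolerance_factor` is an assumed knob: alert when the live rate is more than
    that many times higher, or lower, than expected.
    """
    rate = predicted_positive_rate(predictions)
    if rate > expected_rate * tolerance_factor or rate < expected_rate / tolerance_factor:
        return f"ALERT: {rate:.1%} of transactions flagged, expected about {expected_rate:.1%}"
    return None


normal_window = [1] * 12 + [0] * 988       # 1.2% flagged
suspicious_window = [1] * 100 + [0] * 900  # 10% flagged
print(check_prediction_rate(normal_window))  # None
print(check_prediction_rate(suspicious_window))
```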

Testing your assumptions (correlations)

It is common to analyze the correlation between pairs of features, and between each feature and the target variable. These numbers are used for feature selection and feature engineering. However, once the model is deployed to production, there’s a fair chance that these assumptions will be violated, again because of drift in the incoming input data stream. Hence, monitoring these assumptions can provide a crucial signal about how well the model might be performing.
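Here is a minimal sketch of monitoring one such assumption. It records a correlation measured at training time, recomputes it on a window of live data, and flags large changes. Feature-target correlations can only be recomputed once labels arrive. The column names, the synthetic data, and the 0.2 tolerance are assumptions. The Pearson formula is written out by hand, although Python 3.10+ also provides `statistics.correlation`.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def correlation_drift(train_pairs, live_pairs, tolerance=0.2):
    """Return the column pairs whose correlation moved by more than `tolerance`.

    Both arguments map a pair name to (xs, ys); live_pairs must have the same keys.
    """
    drifted = {}
    for name, (xs, ys) in train_pairs.items():
        before = pearson(xs, ys)
        after = pearson(*live_pairs[name])
        if abs(after - before) > tolerance:
            drifted[name] = (round(before, 2), round(after, 2))
    return drifted


rng = random.Random(1)
# Hypothetical: at training time, ad spend and sales moved together...
spend = [rng.uniform(0, 100) for _ in range(500)]
train = {("ad_spend", "sales"): (spend, [2 * s + rng.gauss(0, 10) for s in spend])}
# ...but in production the relationship has broken down.
live_spend = [rng.uniform(0, 100) for _ in range(500)]
live = {("ad_spend", "sales"): (live_spend, [rng.uniform(0, 200) for _ in live_spend])}
print(correlation_drift(train, live))
```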

Case Study

We discussed a few general approaches to model evaluation. They work well for standard classification and regression tasks. In this section, we look at specific use cases: how evaluation works for a chat bot and for a recommendation engine.

Chat Bot

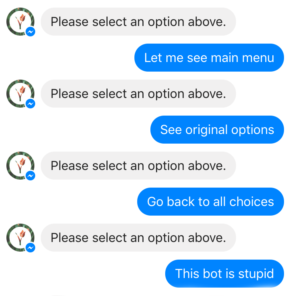

Modern chat bots are used for goal-oriented tasks like checking the status of your flight, ordering something on an e-commerce platform, and automating large parts of customer care call centers. Advances in NLP and Machine Learning have improved the chat bot experience by adding Natural Language Understanding and multilingual capabilities. Unlike a standard classification system, a chat bot can’t simply be measured with one number or metric. An ideal chat bot should walk the user through to the end goal, such as selling something or solving their problem. Below we discuss a few metrics at varying levels of granularity.

Completed Conversations

This is perhaps one of the most important high-level metrics: how many conversations make it to the end. A conversation usually starts with a “hi” or a “hello”. It ends with the user answering a feedback question like “Are you satisfied with the experience?” or “Did you get your issue solved?”.

Number of exchanges

Quite often the user gets irritated with the chat experience or just doesn't complete the conversation. In such cases, a useful piece of information is counting how many exchanges between the bot and the user happened before the user left.
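Both metrics above, completed conversations and number of exchanges, can be computed from the same conversation logs. This minimal sketch assumes each logged conversation is a list of `(speaker, message)` turns. A conversation counts as completed if the bot reached its closing feedback question. The log format and the `FEEDBACK_PROMPT` string are hypothetical.

```python
from statistics import mean

FEEDBACK_PROMPT = "Did you get your issue solved?"  # hypothetical closing question


def is_completed(conversation):
    """A conversation is complete if the bot asked the closing feedback question."""
    return any(speaker == "bot" and text == FEEDBACK_PROMPT for speaker, text in conversation)


def count_exchanges(conversation):
    """Number of user messages, i.e. user-bot exchanges that happened."""
    return sum(1 for speaker, _ in conversation if speaker == "user")


def chat_metrics(conversations):
    """Completion rate, plus how many exchanges abandoned conversations lasted."""
    abandoned = [c for c in conversations if not is_completed(c)]
    return {
        "completion_rate": 1 - len(abandoned) / len(conversations),
        "avg_exchanges_before_drop_off": (
            mean(count_exchanges(c) for c in abandoned) if abandoned else 0.0
        ),
    }


logs = [
    [("user", "hi"), ("bot", "Hello! How can I help?"), ("user", "flight status"),
     ("bot", "Your flight is on time."), ("bot", FEEDBACK_PROMPT), ("user", "yes")],
    [("user", "hello"), ("bot", "Hello! How can I help?"), ("user", "refund"),
     ("bot", "Could you rephrase that?")],
    [("user", "hi"), ("bot", "Hello! How can I help?")],
]
print(chat_metrics(logs))
```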

Reply level feedback

Modern natural-language-based bots try to understand the semantics of a user's messages. Users may not use the exact words the bot expects them to, but an advanced bot should still check whether the user means something similar to what is expected. Chat bots frequently ask for feedback on each of their replies, for example: “Is this the answer you were expecting? Please enter yes or no”. This lets you view logs and check where the bot performs poorly. It also gives you training data for a semantic similarity model.
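Here is a minimal sketch of turning those yes/no answers into a report of where the bot struggles. It groups feedback by the intent the bot detected and sorts intents by their share of "no" answers. The record format, the intent names, and the minimum-count filter are assumptions. The rejected messages are natural candidates to label for the semantic similarity training data mentioned above.

```python
from collections import defaultdict


def weakest_intents(feedback_log, min_count=2):
    """Rank detected intents by the fraction of replies users marked as unhelpful.

    feedback_log: iterable of (detected_intent, user_message, answered_yes) records.
    Intents with fewer than `min_count` answers are skipped as too noisy.
    """
    stats = defaultdict(lambda: [0, 0])  # intent -> [no_count, total]
    for intent, _message, answered_yes in feedback_log:
        stats[intent][1] += 1
        if not answered_yes:
            stats[intent][0] += 1
    ranked = [(intent, no / total) for intent, (no, total) in stats.items() if total >= min_count]
    return sorted(ranked, key=lambda item: item[1], reverse=True)


def relabel_candidates(feedback_log):
    """User messages whose reply was rejected; a human can label what they meant."""
    return [message for _intent, message, answered_yes in feedback_log if not answered_yes]


log = [
    ("flight_status", "where is my plane", True),
    ("flight_status", "is AB123 delayed", True),
    ("refund", "I want my money back", False),
    ("refund", "cancel and refund", True),
    ("refund", "money back pls", False),
]
print(weakest_intents(log))  # [('refund', 0.6666666666666666), ('flight_status', 0.0)]
print(relabel_candidates(log))
```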

Recommendation Engine

No e-commerce company can succeed without knowing its customers on a personal level and using that knowledge to tailor its services. Recommendation engines are one tool for making sense of this knowledge. Let’s take the example of Netflix, the internet television service. In a competition called the Netflix Prize, Netflix awarded $1 million to a team called BellKor’s Pragmatic Chaos. The team had built a recommendation algorithm that was ~10% better than the one Netflix was using.

According to Netflix, a typical user on its site loses interest after 60-90 seconds, having reviewed 10-12 titles, perhaps 3 in detail. Netflix frames the recommendation problem as making sure that each user, on each screen, finds something interesting to watch and understands why it might be interesting. For Netflix, keeping churn low (that is, keeping retention high) is extremely important, because acquiring new customers to replace the ones who leave is expensive. According to Netflix, its recommendation system saves it $1 billion annually.

Since they invest so much in their recommendations, how do they even measure its performance in production?

Take-Rate

One obvious thing to observe is how many people watch things Netflix recommends. This is called take-rate. It is defined as the fraction of recommendations offered that result in a play.
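Take-rate is straightforward to compute once recommendation impressions and plays are logged together. This minimal sketch assumes a list of `(user_id, title_id)` impressions and a set of `(user_id, title_id)` plays. The attribution rule, where a play counts if the user played a title that was recommended to them, is a simplification. A real system would also bound the time between the impression and the play.

```python
def take_rate(impressions, plays):
    """Fraction of recommendation impressions that resulted in a play.

    impressions: list of (user_id, title_id) recommendations shown.
    plays: set of (user_id, title_id) pairs the user actually played.
    """
    if not impressions:
        return 0.0
    taken = sum(1 for shown in impressions if shown in plays)
    return taken / len(impressions)


impressions = [("u1", "t1"), ("u1", "t2"), ("u2", "t1"), ("u2", "t3")]
plays = {("u1", "t2"), ("u2", "t3"), ("u2", "t9")}  # ("u2", "t9") was never recommended
print(take_rate(impressions, plays))  # 0.5
```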

Effective Catalog Size (ECS)

This is another metric, designed to fine-tune successful recommendations. Netflix provides recommendations on 2 main levels: first, top recommendations from the overall catalog, and second, recommendations that are specific to a genre. For a particular genre with N recommendations, ECS measures how spread out the viewing is across the items in the catalog. If most of the viewing comes from a single video, the ECS is close to 1. If the viewing is uniform across all the videos, the ECS is close to N.
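One formula with exactly these two properties is the following, and we believe it is the one Netflix has described. Sort the videos by their share of viewing, p_1 ≥ p_2 ≥ … ≥ p_N, and compute ECS = 2 × (1·p_1 + 2·p_2 + … + N·p_N) − 1. The sketch below implements that formula. Treat it as an illustration rather than Netflix's production code. The viewing-hour numbers are made up.

```python
def effective_catalog_size(hours_per_video):
    """Effective Catalog Size from viewing hours per video.

    Sort the videos by share of viewing p_i (largest first) and compute
    ECS = 2 * sum(i * p_i for i = 1..N) - 1. The result is 1 when all viewing
    goes to one video and N when viewing is spread uniformly.
    """
    total = sum(hours_per_video)
    shares = sorted((h / total for h in hours_per_video), reverse=True)
    return 2 * sum(rank * p for rank, p in enumerate(shares, start=1)) - 1


print(effective_catalog_size([100, 0, 0, 0]))    # 1.0
print(effective_catalog_size([25, 25, 25, 25]))  # 4.0
print(effective_catalog_size([70, 10, 10, 10]))  # in between: one dominant title
```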

Machine Learning in production is not static - it changes with the environment

Let's say you are an ML Engineer at a social media company. Imagine how much of the content posted on your website over the last couple of weeks talks only about Covid-19. Almost every user who usually talks about AI or Biology, or who just randomly rants on the website, is now talking about Covid-19. You know this is a spike and that the trend isn’t going to last. But for now, your data distribution has changed considerably.

What should you expect from this? As an ML person, what should be your next step? Do you expect your Machine Learning model to work perfectly?

Model Drift

As discussed above, your model is now being used on data whose distribution it is unfamiliar with. Let’s continue with the example of Covid-19. Not only does the amount of content on that topic increase, but so does the number of product searches for masks and sanitizers. There are many possible trends or outliers one can expect. A Machine Learning model trained on static data cannot account for these changes. It suffers from what is called model drift. When, as here, it is the distribution of the inputs that has changed, this is known as covariate shift.

The tests used to track a model's performance can naturally help detect model drift. One can set up change-detection tests that detect drift as a change in the statistics of the data-generating process. It is also possible to reduce drift by providing contextual information. In the case of Covid-19, this could be information indicating that a text or tweet belongs to a topic that has been trending recently, so that the model can condition its prediction on it. The drift won’t be eliminated completely, though.
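As one concrete example of a change-detection test, here is a sketch of the Page-Hinkley test. It is a classic sequential test. The post itself does not name a specific one. The test tracks the cumulative deviation of a stream from its running mean and raises an alarm when that deviation rises too far above its lowest point so far. This minimal sketch watches for an upward shift in the mean. The `delta` and `threshold` values are assumptions you would tune. The stream could be, hypothetically, the daily share of Covid-19-related posts or the model's daily error rate.

```python
class PageHinkley:
    """Page-Hinkley test for detecting an upward shift in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=50.0):
        self.delta = delta          # size of fluctuation tolerated without counting it
        self.threshold = threshold  # alarm when the cumulative deviation exceeds this
        self.n = 0
        self.mean = 0.0
        self.cumulative = 0.0
        self.minimum = 0.0

    def update(self, x):
        """Add one observation; return True if a change is detected."""
        self.n += 1
        self.mean += (x - self.mean) / self.n  # running mean, including x
        self.cumulative += x - self.mean - self.delta
        self.minimum = min(self.minimum, self.cumulative)
        return self.cumulative - self.minimum > self.threshold


def first_alarm(stream, **kwargs):
    """Index of the first observation that triggers the detector, or None."""
    detector = PageHinkley(**kwargs)
    for i, x in enumerate(stream):
        if detector.update(x):
            return i
    return None


# Hypothetical daily share (%) of posts on one topic: stable, then a sudden spike.
stream = [0.0] * 100 + [5.0] * 50
print(first_alarm(stream))  # 110
```

Raising `threshold` gives fewer false alarms but slower detection. Lowering it does the opposite.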

Solution - Retraining your model

Questions to ask before retraining

So far we have established the idea of model drift. How do we solve it? We can retrain our model on the new data. So should we call model.fit() again and call it a day?

- How much data should you use for retraining?

How much new data should we use for retraining? Should we also use the older data? If so, what is a good mix? Domain knowledge and experience can help here. If you know that the data distribution changes frequently, you can use a larger proportion of new data. On the other hand, suppose you don’t have many new examples but your model's performance is getting worse. Then you can retrain on all the new data plus a sizeable chunk of the old data.

- How often should you retrain?

How often should our retraining jobs run? If you receive new data periodically, you might want to schedule retraining jobs accordingly. For example, suppose you are predicting which applicants will be admitted to a school based on their personal information. It makes no sense to run training jobs every day, because you only get new data every semester or year.

- Should you retrain the entire model?

Should we train the entire model on the new data? If your model is huge, training the entire model is expensive and time-consuming. Maybe you should train only a few layers and freeze the rest of the network. For instance, XLNet, a very large deep learning model used for NLP tasks, reportedly cost $245,000 to train. Yes, that much money to train a single Machine Learning model. Your models probably won't need that much computation, but training models on cloud GPUs/TPUs can still be very expensive. If a model is several GBs in size, storing it also carries a significant cost. Freezing part of a model is possible in deep learning but hard to do with traditional machine learning algorithms. Then again, traditional algorithms are usually not very expensive to train compared to deep learning models.

- Should you deploy directly after retraining?

Should we trust our retrained model blindly and deploy it as soon as it is trained? Even after retraining on new data, there is still a risk that the new model performs worse than the old one. It is often good practice to let the old model keep serving requests for some time after the retrained model is built. Meanwhile, the retrained model can generate shadow predictions. These predictions are not used directly, but they are logged so you can check that the new model is sane. Once you are satisfied, the old model can be replaced with the new one. It is possible to automate deployment after retraining, but until you are sure the process is reliable, it is better to deploy manually.
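Here is a minimal sketch of shadow mode, assuming both models are plain callables. The current model's prediction is returned to the user, while the retrained model's prediction is only logged. Once ground truth arrives, the log is used to compare the two. The function names, the in-memory log, and the toy threshold models are hypothetical. A real system would write the log to persistent storage.

```python
shadow_log = []  # in production this would be a database or a log stream


def serve(request_id, features, live_model, shadow_model):
    """Answer with the live model; record the shadow model's prediction without using it."""
    live_pred = live_model(features)
    try:
        shadow_pred = shadow_model(features)
    except Exception as exc:  # a broken shadow model must never affect users
        shadow_pred = f"error: {exc}"
    shadow_log.append({"id": request_id, "live": live_pred, "shadow": shadow_pred})
    return live_pred


def compare(log, ground_truth):
    """Accuracy of both models on the requests whose true label is now known."""
    labeled = [r for r in log if r["id"] in ground_truth]
    if not labeled:
        return None
    live_acc = sum(r["live"] == ground_truth[r["id"]] for r in labeled) / len(labeled)
    shadow_acc = sum(r["shadow"] == ground_truth[r["id"]] for r in labeled) / len(labeled)
    return {"n": len(labeled), "live": live_acc, "shadow": shadow_acc}


def old_model(x):
    return int(x > 50)


def retrained_model(x):
    return int(x > 40)


for i, x in enumerate([10, 45, 60, 42, 90]):
    serve(i, x, old_model, retrained_model)

truth = {0: 0, 1: 1, 2: 1, 3: 1}  # labels that have arrived so far
print(compare(shadow_log, truth))  # {'n': 4, 'live': 0.5, 'shadow': 1.0}
```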

There are many more questions one can ask depending on the application and the business.
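Returning to the first question above (how much new versus old data to use), the mix can be made an explicit, tunable parameter. This is a minimal sketch. The `new_fraction` knob and the function name are assumptions, and the right value depends on how quickly your data changes.

```python
import random


def build_retraining_set(old_data, new_data, size, new_fraction=0.5, seed=0):
    """Sample `size` training examples, aiming for `new_fraction` of them to be new.

    If there are not enough new examples, all of them are used and the rest of
    the set is filled with a random sample of old examples.
    """
    if not 0.0 <= new_fraction <= 1.0:
        raise ValueError("new_fraction must be between 0 and 1")
    rng = random.Random(seed)
    n_new = min(len(new_data), round(size * new_fraction))
    n_old = min(len(old_data), size - n_new)
    mix = rng.sample(new_data, n_new) + rng.sample(old_data, n_old)
    rng.shuffle(mix)  # interleave old and new examples
    return mix


old = [("old", i) for i in range(10_000)]
new = [("new", i) for i in range(1_000)]

# The distribution changes quickly: weight the set heavily towards new data.
recent_heavy = build_retraining_set(old, new, size=1_250, new_fraction=0.8)
# Few new examples: use all of them plus a sizeable chunk of old data.
few_new = build_retraining_set(old, new, size=5_000, new_fraction=0.5)

print(sum(tag == "new" for tag, _ in recent_heavy), len(recent_heavy))  # 1000 1250
print(sum(tag == "new" for tag, _ in few_new), len(few_new))            # 1000 5000
```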

Setting up infrastructure for model retraining

As with most industry use cases of Machine Learning, the Machine Learning code is rarely the major part of the system. Most of the concerns and effort lie in the surrounding infrastructure code. Before we get into an example, let’s look at a few useful tools:

Containers

Containers package an application's code together with its dependencies, so the same application can be built and run consistently across systems. They run in isolated environments and do not interfere with the rest of the system. Because they share the host's operating system kernel, they are more resource-efficient than virtual machines.

Kubernetes

Kubernetes is a tool for managing containers. It helps deploy, scale, and manage containerized applications.

In our case, if we wish to automate the model retraining process, we need to set up a training job on Kubernetes. A Kubernetes Job is a controller that makes sure pods run to completion. Pods are the smallest deployable units in Kubernetes. Instead of running containers directly, Kubernetes runs pods, each of which contains one or more containers. The training job would run the training and store the model in cloud storage. We can then create a separate inference job that picks up the stored model to make inferences.
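Here is a minimal sketch of such a training job. It is written as a Python dict that follows the Kubernetes `batch/v1` Job schema and dumped to JSON, since `kubectl apply -f` accepts JSON as well as YAML. The image name, the `train.py --output` command, and the bucket path are hypothetical placeholders. Wrapping the Job spec in a `batch/v1` CronJob, as in the second function, is one way to run retraining on a schedule.

```python
import json


def training_job(name, image, model_uri):
    """Kubernetes batch/v1 Job that runs one training container to completion."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 2,  # retry a failed training pod at most twice
            "template": {
                "spec": {
                    "restartPolicy": "Never",  # Job pods must use Never or OnFailure
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            # Hypothetical entrypoint: train, then upload the model to model_uri.
                            "command": ["python", "train.py", "--output", model_uri],
                        }
                    ],
                }
            },
        },
    }


def retraining_cronjob(name, image, model_uri, schedule="0 3 * * 0"):
    """Kubernetes batch/v1 CronJob that creates the training Job on a cron schedule."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,  # default: every Sunday at 03:00
            "jobTemplate": {"spec": training_job(name, image, model_uri)["spec"]},
        },
    }


manifest = retraining_cronjob(
    "weekly-retrain",
    "registry.example.com/ml/trainer:latest",  # hypothetical image
    "gs://example-bucket/models/latest",       # hypothetical storage location
)
print(json.dumps(manifest, indent=2))  # save to a file, then: kubectl apply -f <file>
```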

The above system would be a pretty basic one. Depending on the strategy, there is potential for a lot more infrastructure development. Let’s say you want to use a champion-challenger test to select the best model. You have a champion model currently in production and, say, 3 challenger models, and all four are being evaluated. You decide what share of requests is randomly routed to each model. Based on their performance and on statistical tests, you decide whether one of the challengers performs significantly better than the champion. This is very similar to A/B testing. This testing strategy requires additional infrastructure: processes to distribute requests, log the results for every model, decide which model is best, and deploy it automatically.
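Here is a minimal sketch of the routing and the statistical check. It assumes the metric is a success rate, for example the fraction of predictions later confirmed correct. Requests are routed at random with fixed weights. A one-sided two-proportion z-test then asks whether a challenger's success rate is significantly higher than the champion's. The model names, weights, outcome counts, and the 0.05 significance level are assumptions.

```python
import math
import random
from collections import Counter

MODELS = ["champion", "challenger_a", "challenger_b", "challenger_c"]
WEIGHTS = [0.7, 0.1, 0.1, 0.1]  # assumed split: most traffic stays on the champion


def route(rng):
    """Randomly pick the model that serves a request, according to WEIGHTS."""
    return rng.choices(MODELS, weights=WEIGHTS, k=1)[0]


def normal_sf(z):
    """P(Z > z) for a standard normal random variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def challenger_p_value(champ_success, champ_total, chall_success, chall_total):
    """One-sided two-proportion z-test p-value for 'challenger rate > champion rate'."""
    p_champ = champ_success / champ_total
    p_chall = chall_success / chall_total
    pooled = (champ_success + chall_success) / (champ_total + chall_total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / champ_total + 1 / chall_total))
    if se == 0:
        return 0.5  # all successes or all failures: no evidence either way
    return normal_sf((p_chall - p_champ) / se)


rng = random.Random(0)
traffic = Counter(route(rng) for _ in range(10_000))
print(traffic)  # roughly 7,000 requests for the champion and 1,000 per challenger

# Hypothetical outcomes logged for the champion and one challenger.
p = challenger_p_value(champ_success=3500, champ_total=7000, chall_success=560, chall_total=1000)
print(f"p-value = {p:.4f}:", "promote challenger" if p < 0.05 else "keep champion")
```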

Online Learning

Generally, Machine Learning models are trained offline in batches by Data Scientists and then deployed in production. In case of drift or poor performance, the models are retrained on new data and updated, and even this retraining pipeline can be automated. But what if the model were continuously learning, updating its parameters every time it is used? This is close to ‘learning on the fly’. It helps the model pick up variations in the distribution as quickly as possible and reduces drift in many cases.

For example, suppose you build a model that takes news updates, weather reports, and social media data to predict the amount of rainfall in a region. At the end of the day, you have the true measure of the rainfall that region experienced. Your model then uses that day's data to make an incremental improvement to its next predictions.
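Here is a minimal sketch of that incremental update. It uses a linear model trained with one stochastic gradient descent step per new observation. The two numeric features stand in, hypothetically, for summaries of the news, weather, and social media inputs. The learning rate and the synthetic "true" relationship in the usage loop are also assumptions.

```python
class OnlineLinearRegression:
    """Linear model y ≈ w·x + b, updated with one SGD step per observed example."""

    def __init__(self, n_features, learning_rate=0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def learn_one(self, x, y):
        """Squared-error gradient step on a single (features, target) pair."""
        error = self.predict(x) - y
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


# Hypothetical daily features, both scaled to [0, 1].
model = OnlineLinearRegression(n_features=2)
for day in range(5000):
    features = [(day % 10) / 10, (day % 7) / 7]
    actual = 3 * features[0] + 2 * features[1] + 1  # assumed true rainfall, known by evening
    model.learn_one(features, actual)               # incremental update for tomorrow
print([round(w, 2) for w in model.w], round(model.b, 2))
```

Because each update touches a single example, the model never needs the full history. That same property is why the maintenance issues described next matter.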

Online learning methods are found to be relatively faster than their batch equivalents. One thing that’s not obvious about online learning is maintenance. If there are unexpected changes in the upstream data processing pipelines, it is hard to manage their impact on the online algorithm. With batch training, the data would be dumped into cloud storage and training happened offline, so the deployed model was not affected until the new one was ready. With online learning, the upstream pipelines are much more tightly coupled with the model's predictions. In addition, it is hard to pick a test set, because the distribution keeps changing and we have no prior assumptions about it. Any test set we pick implicitly assumes that it represents the data the model is currently operating on.
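One common workaround for the test-set problem, not covered above, is prequential ("test-then-train") evaluation. Each new example is first used to score the model's prediction and only then used to update the model, so the evaluation always reflects the current data. This minimal sketch uses a deliberately simple online forecaster: an exponentially weighted moving average of past rainfall. The smoothing factor, the 7-day window, and the rainfall numbers are assumptions.

```python
from collections import deque


class EWMAForecaster:
    """Predicts the next value as an exponentially weighted average of past values."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha
        self.level = None

    def predict(self):
        return 0.0 if self.level is None else self.level

    def learn_one(self, y):
        self.level = y if self.level is None else self.alpha * y + (1 - self.alpha) * self.level


def prequential_mae(model, stream, window=7):
    """Test-then-train: score each prediction before learning from the true value.

    Returns the mean absolute error over a sliding window after each step,
    so recent performance is not diluted by old results.
    """
    recent_errors = deque(maxlen=window)
    history = []
    for y in stream:
        recent_errors.append(abs(model.predict() - y))  # test first...
        model.learn_one(y)                              # ...then train
        history.append(sum(recent_errors) / len(recent_errors))
    return history


# Hypothetical daily rainfall (mm): a dry spell followed by a rainy season.
rainfall = [2, 1, 3, 2, 2, 1, 2, 2, 20, 25, 22, 24, 26, 23, 25, 24]
history = prequential_mae(EWMAForecaster(), rainfall)
for day, mae in enumerate(history):
    print(f"day {day}: rolling MAE = {mae:.1f}")
```

The rolling error jumps when the rainy season starts and then falls as the forecaster adapts. This is the kind of signal a fixed test set would miss.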

Conclusion

In this post, we saw how poor Machine Learning can cost a company money and reputation, why it is hard to measure the performance of a live model, and how we can do it effectively. We also looked at evaluation strategies for specific examples like recommendation systems and chat bots. Finally, we saw how data drift makes ML in production dynamic and how retraining can address it.

It is hard to build an ML system from scratch, especially if you don’t have an in-house team of experienced Machine Learning, Cloud and DevOps engineers. That’s where we can help you! You can easily create awesome ML models for image classification, object detection, and OCR (receipt and invoice automation) on our platform, even with less data. Deploying them takes just a few lines of code. Make your free model today at nanonets.com