Introduction

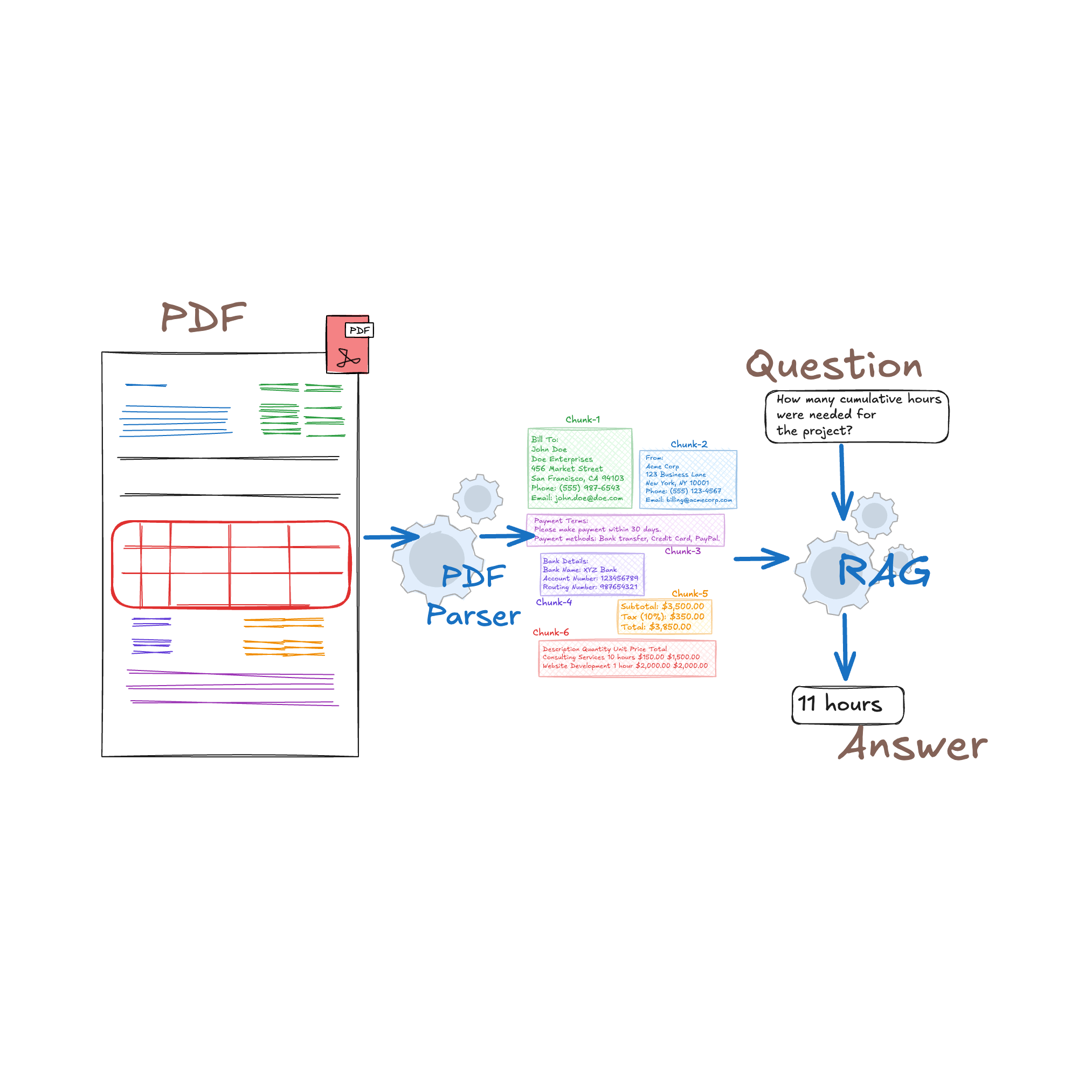

In the world of AI, where data drives decisions, choosing the right tools can make or break your project. For Retrieval-Augmented Generation systems more commonly known as RAG systems, PDFs are a goldmine of information—if you can unlock their contents. But PDFs are tricky; they’re often packed with complex layouts, embedded images, and hard-to-extract data.

If you’re not familiar with RAG systems, such systems work by enhancing an AI model’s ability to provide accurate answers by retrieving relevant information from external documents. Large Language Models (LLMs), such as GPT, use this data to deliver more informed, contextually aware responses. This makes RAG systems especially powerful for handling complex sources like PDFs, which often contain tricky-to-access but valuable content.

The right PDF parser doesn't just read files—it turns them into a wealth of actionable insights for your RAG applications. In this guide, we'll dive into the essential features of top PDF parsers, helping you find the perfect fit to power your next RAG breakthrough.

Understanding PDF Parsing for RAG

What is PDF Parsing?

PDF parsing is the process of extracting and converting the content within PDF files into a structured format that can be easily processed and analyzed by software applications. This includes text, images, and tables that are embedded within the document.

Why is PDF Parsing Crucial for RAG Applications?

RAG systems rely on high-quality, structured data to generate accurate and ctextually relevant outputs. PDFs, often used for official documents, business reports, and legal contracts, contain a wealth of information but are notorious for their complex layouts and unstructured data. Effective PDF parsing ensures that this information is accurately extracted and structured, providing the RAG system with the reliable data it needs to function optimally. Without robust PDF parsing, critical data could be misinterpreted or lost, leading to inaccurate results and undermining the effectiveness of the RAG application.

The Role of PDF Parsing in Enhancing RAG Performance

Tables are a prime example of the complexities involved in PDF parsing. Consider the S-1 document used in the registration of securities. The S-1 contains detailed financial information about a company’s business operations, use of proceeds, and management, often presented in tabular form. Accurately extracting these tables is crucial because even a minor error can lead to significant inaccuracies in financial reporting or compliance with SEC (Securities and Exchange Commission regulations), which is a U.S. government agency responsible for regulating the securities markets and protecting investors. It ensures that companies provide accurate and transparent information, particularly through documents like the S-1, which are filed when a company plans to go public or offer new securities.

A well-designed PDF parser can handle these complex tables, maintaining the structure and relationships between the data points. This precision ensures that when the RAG system retrieves and uses this information, it does so accurately, leading to more reliable outputs.

For example, we can present the following table from our financial S1 PDF to an LLM and request it to perform a specific analysis based on the data provided.

By enhancing the extraction accuracy and preserving the integrity of complex layouts, PDF parsing plays a vital role in elevating the performance of RAG systems, particularly in use cases like financial document analysis, where precision is non-negotiable.

Key Considerations When Choosing a PDF Parser for RAG

When selecting a PDF parser for use in a RAG system, it's essential to evaluate several critical factors to ensure that the parser meets your specific needs. Below are the key considerations to keep in mind:

Accuracy of Text Extraction

Accuracy is key to making sure that the data extracted from PDFs is dependable and can be easily used in RAG applications. Poor extraction can lead to misunderstandings and hurt the performance of AI models.

Ability to Maintain Document Structure

- Keeping the original structure of the document is important to make sure that the extracted data keeps its original meaning. This includes preserving the layout, order, and connections between different parts (e.g., headers, footnotes, tables).

Support for Various PDF Types

- PDFs come in various forms, including digitally created PDFs, scanned PDFs, interactive PDFs, and those with embedded media. A parser’s ability to handle different types of PDFs ensures flexibility in working with a wide range of documents.

Integration Capabilities with RAG Frameworks

- For a PDF parser to be useful in an RAG system, it needs to work well with the existing setup. This includes being able to send extracted data directly into the system for indexing, searching, and generating results.

Challenges in PDF Parsing for RAG

RAG systems rely heavily on accurate and structured data to function effectively. PDFs, however, often present significant challenges due to their complex formatting, varying content types, and inconsistent structures. Here are the primary challenges in PDF parsing for RAG:

Dealing with Complex Layouts and Formatting

PDFs often include multi-column layouts, mixed text and images, footnotes, and headers, all of which make it difficult to extract information in a linear, structured format. The non-linear nature of many PDFs can confuse parsers, leading to jumbled or incomplete data extraction.

A financial report might have tables, charts, and multiple columns of text on the same page. Take the above layout for example, extracting the relevant information while maintaining the context and order can be challenging for standard parsers.

Wrongly Extracted Data:

Handling Scanned Documents and Images

Many PDFs contain scanned images of documents rather than digital text. These documents usually do require Optical Character Recognition (OCR) to convert the images into text, but OCR can struggle with poor image quality, unusual fonts, or handwritten notes, and in most PDF Parsers the data from image extraction feature is not available.

Extracting Tables and Structured Data

Tables are a gold mine of data, however, extracting tables from PDFs is notoriously difficult due to the varying ways tables are formatted. Tables may span multiple pages, include merged cells, or have irregular structures, making it hard for parsers to correctly identify and extract the data.

An S-1 filing might include complex tables with financial data that need to be extracted accurately for analysis. Standard parsers may misinterpret rows and columns, leading to incorrect data extraction.

Before expecting your RAG system to analyze numerical data stored in critical tables, it’s essential to first evaluate how effectively this data is extracted and sent to the LLM. Ensuring accurate extraction is key to determining how reliable the model's calculations will be.

Comparative Analysis of Popular PDF Parsers for RAG

In this section of the article, we will be comparing some of the most well-known PDF parsers on the challenging aspects of PDF extraction using the AllBirds S1 forum. Keep in mind that the AllBirds S1 PDF is 700 pages, and highly complex PDF parsers that poses significant challenges, making this comparison section a real test of the five parsers mentioned below. In more common and less complex PDF documents, these PDF Parsers might offer better performance when extracting the needed data.

Multi-Column Layouts Comparison

Below is an example of a multi-column layout extracted from the AllBirds S1 form. While this format is straightforward for human readers, who can easily track the data of each column, many PDF parsers struggle with such layouts. Some parsers may incorrectly interpret the content by reading it as a single vertical column, rather than recognizing the logical flow across multiple columns. This misinterpretation can lead to errors in data extraction, making it challenging to accurately retrieve and analyze the information contained within such documents. Proper handling of multi-column formats is essential for ensuring accurate data extraction in complex PDFs.

PDF Parsers in Action

Now let's check how some PDF parsers extract multi-column layout data.

a) PyPDF1 (Multi-Column Layouts Comparison)

Nicole BrookshirePeter WernerCalise ChengKatherine DenbyCooley LLP3 Embarcadero Center, 20th FloorSan Francisco, CA 94111(415) 693-2000Daniel LiVP, LegalAllbirds, Inc.730 Montgomery StreetSan Francisco, CA 94111(628) 225-4848Stelios G. SaffosRichard A. KlineBenjamin J. CohenBrittany D. RuizLatham & Watkins LLP1271 Avenue of the AmericasNew York, New York 10020(212) 906-1200The primary issue with the PyPDF1 parser is its inability to neatly separate extracted data into distinct lines, leading to a cluttered and confusing output. Furthermore, while the parser recognizes the concept of multiple columns, it fails to properly insert spaces between them. This misalignment of text can cause significant challenges for RAG systems, making it difficult for the model to accurately interpret and process the information. This lack of clear separation and spacing ultimately hampers the effectiveness of the RAG system, as the extracted data does not accurately reflect the structure of the original document.

b) PyPDF2 (Multi-Column Layouts Comparison)

Nicole Brookshire Daniel Li Stelios G. Saffos

Peter Werner VP, Legal Richard A. Kline

Calise Cheng Allbirds, Inc. Benjamin J. Cohen

Katherine Denby 730 Montgomery Street Brittany D. Ruiz

Cooley LLP San Francisco, CA 94111 Latham & Watkins LLP

3 Embarcadero Center, 20th Floor (628) 225-4848 1271 Avenue of the Americas

San Francisco, CA 94111 New York, New York 10020

(415) 693-2000 (212) 906-1200As shown above, even though the PyPDF2 parser separates the extracted data into separate lines making it easier to understand, it still struggles with effectively handling multi-column layouts. Instead of recognizing the logical flow of text across columns, it mistakenly extracts the data as if the columns were single vertical lines. This misalignment results in jumbled text that fails to preserve the intended structure of the content, making it difficult to read or analyze the extracted information accurately. Proper document parsing tools should be able to identify and correctly process such complex layouts to maintain the integrity of the original document's structure.

c) PDFMiner (Multi-Column Layouts Comparison)

Nicole Brookshire

Peter Werner

Calise Cheng

Katherine Denby

Cooley LLP

3 Embarcadero Center, 20th Floor

San Francisco, CA 94111

(415) 693-2000

Copies to:

Daniel Li

VP, Legal

Allbirds, Inc.

730 Montgomery Street

San Francisco, CA 94111

(628) 225-4848

Stelios G. Saffos

Richard A. Kline

Benjamin J. Cohen

Brittany D. Ruiz

Latham & Watkins LLP

1271 Avenue of the Americas

New York, New York 10020

(212) 906-1200The PDFMiner parser handles the multi-column layout with precision, accurately extracting the data as intended. It correctly identifies the flow of text across columns, preserving the document’s original structure and ensuring that the extracted content remains clear and logically organized. This capability makes PDFMiner a reliable choice for parsing complex layouts, where maintaining the integrity of the original format is crucial.

d) Tika-Python (Multi-Column Layouts Comparison)

Copies to:

Nicole Brookshire

Peter Werner

Calise Cheng

Katherine Denby

Cooley LLP

3 Embarcadero Center, 20th Floor

San Francisco, CA 94111

(415) 693-2000

Daniel Li

VP, Legal

Allbirds, Inc.

730 Montgomery Street

San Francisco, CA 94111

(628) 225-4848

Stelios G. Saffos

Richard A. Kline

Benjamin J. Cohen

Brittany D. Ruiz

Latham & Watkins LLP

1271 Avenue of the Americas

New York, New York 10020

(212) 906-1200Although the Tika-Python parser doesn’t match the precision of PDFMiner in extracting data from multi-column layouts, it still demonstrates a strong ability to understand and interpret the structure of such data. While the output may not be as polished, Tika-Python effectively recognizes the multi-column format, ensuring that the overall structure of the content is preserved to a reasonable extent. This makes it a reliable option when handling complex layouts, even if some refinement might be necessary post-extraction

e) Llama Parser (Multi-Column Layouts Comparison)

Nicole Brookshire Daniel Lilc.Street1 Stelios G. Saffosen

Peter Werner VP, Legany A 9411 Richard A. Kline

Katherine DenCalise Chengby 730 Montgome C848Allbirds, Ir Benjamin J. CohizLLPcasBrittany D. Rus meri20

3 Embarcadero Center 94111Cooley LLP, 20th Floor San Francisco,-4(628) 225 1271 Avenue of the Ak 100Latham & Watkin

San Francisco, CA0(415) 693-200 New York, New Yor0(212) 906-120The Llama Parser struggled with the multi-column layout, extracting the data in a linear, vertical format rather than recognizing the logical flow across the columns. This results in disjointed and hard-to-follow data extraction, diminishing its effectiveness for documents with complex layouts.

Table Comparison

Extracting data from tables, especially when they contain financial information, is critical for ensuring that important calculations and analyses can be performed accurately. Financial data, such as balance sheets, profit and loss statements, and other quantitative information, is often structured in tables within PDFs. The ability of a PDF parser to correctly extract this data is essential for maintaining the integrity of financial reports and performing subsequent analyses. Below is a comparison of how different PDF parsers handle the extraction of such data.

Below is an example table extracted from the same Allbird S1 forum in order to test our parsers on.

Now let's check how some PDF parsers extract tabular data.

a) PyPDF1 (Table Comparison)

☐CALCULATION OF REGISTRATION FEETitle of Each Class ofSecurities To Be RegisteredProposed MaximumAggregate Offering PriceAmount ofRegistration FeeClass A common stock, $0.0001 par value per share$100,000,000$10,910(1)Estimated solely for the purpose of calculating the registration fee pursuant to Rule 457(o) under the Securities Act of 1933, as amended.(2)Similar to its handling of multi-column layout data, the PyPDF1 parser struggles with extracting data from tables. Just as it tends to misinterpret the structure of multi-column text by reading it as a single vertical line, it similarly fails to maintain the proper formatting and alignment of table data, often leading to disorganized and inaccurate outputs. This limitation makes PyPDF1 less reliable for tasks that require precise extraction of structured data, such as financial tables.

b) PyPDF2 (Table Comparison)

Similar to its handling of multi-column layout data, the PyPDF2 parser struggles with extracting data from tables. Just as it tends to misinterpret the structure of multi-column text by reading it as a single vertical line, however unlike the PyPDF1 Parser the PyPDF2 Parser splits the data into separate lines.

CALCULATION OF REGISTRATION FEE

Title of Each Class of Proposed Maximum Amount of

Securities To Be Registered Aggregate Offering Price(1)(2) Registration Fee

Class A common stock, $0.0001 par value per share $100,000,000 $10,910c) PDFMiner (Table Comparison)

Although the PDFMiner parser understands the basics of extracting data from individual cells, it still struggles with maintaining the correct order of column data. This issue becomes apparent when certain cells are misplaced, such as the “Class A common stock, $0.0001 par value per share” cell, which can end up in the wrong sequence. This misalignment compromises the accuracy of the extracted data, making it less reliable for precise analysis or reporting.

CALCULATION OF REGISTRATION FEE

Class A common stock, $0.0001 par value per share

Title of Each Class of

Securities To Be Registered

Proposed Maximum

Aggregate Offering Price

(1)(2)

$100,000,000

Amount of

Registration Fee

$10,910d) Tika-Python (Table Comparison)

As demonstrated below, the Tika-Python parser misinterprets the multi-column data into vertical extraction., making it not that much better compared to the PyPDF1 and 2 Parsers.

CALCULATION OF REGISTRATION FEE

Title of Each Class of

Securities To Be Registered

Proposed Maximum

Aggregate Offering Price

Amount of

Registration Fee

Class A common stock, $0.0001 par value per share $100,000,000 $10,910e) Llama Parser (Table Comparision)

CALCULATION OF REGISTRATION FEE

Securities To Be RegisteTitle of Each Class ofred Aggregate Offering PriceProposed Maximum(1)(2) Registration Amount ofFee

Class A common stock, $0.0001 par value per share $100,000,000 $10,910The Llama Parser faced challenges when extracting data from tables, failing to capture the structure accurately. This resulted in misaligned or incomplete data, making it difficult to interpret the table’s contents effectively.

Image Comparison

In this section, we will evaluate the performance of our PDF parsers in extracting data from images embedded within the document.

Llama Parser

Text: Table of Contents

allbids

Betler Things In A Better Way applies

nof only to our products, but to

everything we do. That'$ why we're

pioneering the first Sustainable Public

Equity OfferingThe PyPDF1, PyPDF2, PDFMiner, and Tika-Python libraries are all limited to extracting text and metadata from PDFs, but they do not possess the capability to extract data from images. On the other hand, the Llama Parser demonstrated the ability to accurately extract data from images embedded within the PDF, providing reliable and precise results for image-based content.

Summary of Quality of Extraction

Note that the below summary is based on how the PDF Parsers have handled the given challenges provided in the AllBirds S1 Form.

Best Practices for PDF Parsing in RAG Applications

Effective PDF parsing in RAG systems relies heavily on pre-processing techniques to enhance the accuracy and structure of the extracted data. By applying methods tailored to the specific challenges of scanned documents, complex layouts, or low-quality images, the parsing quality can be significantly improved.

Pre-processing Techniques to Improve Parsing Quality

Pre-processing PDFs before parsing can significantly improve the accuracy and quality of the extracted data, especially when dealing with scanned documents, complex layouts, or low-quality images.

Here are some reliable techniques:

- Text Normalization: Standardize the text before parsing by removing unwanted characters, correcting encoding issues, and normalizing font sizes and styles.

- Converting PDFs to HTML: Converting PDFs to HTML adds valuable HTML elements, such as <p>, <br>, and <table>, which inherently preserve the structure of the document, like headers, paragraphs, and tables. This helps in organizing the content more effectively compared to PDFs. For example, converting a PDF to HTML can result in structured output like:

<html><body><p>Table of Contents<br>As filed with the Securities and Exchange Commission on August 31, 2021<br>Registration No. 333-<br>UNITED STATES<br>SECURITIES AND EXCHANGE COMMISSION<br>Washington, D.C. 20549<br>FORM S-1<br>REGISTRATION STATEMENT<br>UNDER<br>THE SECURITIES ACT OF 1933<br>Allbirds, Inc.<br>- Page Selection: Extract only the relevant pages of a PDF to reduce processing time and focus on the most important sections. This can be done by manually or programmatically selecting pages that contain the required information. If you're extracting data from a 700-page PDF, selecting only the pages with balance sheets can save significant processing time.

- Image Enhancement: By using image enhancement techniques, we can improve the clarity of the text in scanned PDFs. This includes adjusting contrast, brightness, and resolution, all of which contribute to making OCR more effective. These steps help ensure that the extracted data is more accurate and reliable.

Testing Our PDF Parser Inside a RAG System

In this section, we will take our testing to the next level by integrating each of our PDF parsers into a fully functional RAG system, leveraging the Llama 3 model as the system's LLM.

We will evaluate the model's responses to specific questions and assess how the quality of the PDF parsers in extracting data impacts the accuracy of the RAG system’s replies. By doing so, we can gauge the parser's performance in handling a complex document like the S1 filing, which is long, highly detailed, and difficult to parse. Even a minor error in data extraction could significantly impair the RAG model's ability to generate accurate responses.

This method will allow us to push the parsers to their limits, testing their robustness and accuracy in handling intricate legal and financial documentation.

1) Question from Text: "What is the total net revenue for Allbirds for the year ended December 31, 2020?"

Correct Answer: $219.3 million

PyPDF

- Answer: the conversion ratio is subject to adjustment upon the occurrence of certain events, as described below.

- Explanation: Incorrect answer. Due to the unstructured data extracted by PyPDF, the RAG system retrieves incorrect information.

PyPDF2

- Answer: in our Annual Report on Form 10-K for the year ended December 31, 2020. and $5.4 million and $7.4 million for the six months ended June 30, 2020 and 2021, respectively. (2) Includes depreciation and amortization expense of ………..

- Explanation: Incorrect answer, despite the RAG system returning the correct answer, "Allbirds, Inc. Net revenue $193,673 $219,296 $92,779 $117,542 December 31," the model was unable to provide an accurate response due to the poor parsing of the table from the PDF. The messy extraction of data hindered the system’s ability to interpret the numbers and deliver a precise answer.

PDFMiner

- Answer: $219.3 million. According to the given context, Allbirds' net revenue grew from $126.0 million in 2018 to $219.3 million in 2020, representing a compound annual growth rate. Therefore, the total net revenue for Allbirds for the year ended December 31, 2020 is $219.3 million.

- Explanation: Correct answer.

Tika Python

- Answer: According to the 10-K filing of Allbirds, Inc. for the year ended December 31, 2020, the total net revenue for the year was $266.7 million. [1]

- Explanation: Incorrect answer, the retrieved data did not contain the correct response, thus the model used it pre-stored knowledge.

Llama Parser

- Answer: The total net revenue for Allbirds for the year ended December 31, 2020 is $219,296. This is stated in the given context.

- Explanation: Correct answer.

Question from Table: What is the proposed maximum aggregate offering price for Allbirds, Inc.'s Class A common stock in this registration statement?

Correct answer: $100,000,000.

PyPDF

- Answer: the conversion ratio is subject to adjustment upon the occurrence of certain events, as described below.

- Explanation: Incorrect answer. While the value "$100,000,000" was present in the retrieved data, the large volume of retrieved information due to the bad PDF Parsing overwhelmed the LLM, causing confusion and leading to an inaccurate response.

PyPDF2

- Answer: The proposed maximum aggregate offering price for Allbirds, Inc.'s Class A common stock is $100,000,000.

- Explanation: Correct answer., the correct value was returned in the retrieved data.

PDFMiner

- Answer: Answer: The proposed maximum aggregate offering price for Allbirds, Inc.'s Class A common stock in this registration statement is not specified in the context.

- Explanation: Incorrect answer, the correct answer “$100,000,000” was not returned in the retrieved data, due to bad PDF parsing.

Tika Python

- Answer: The proposed maximum aggregate offering price for Allbirds, Inc.'s Class A common stock is not specified in the given context.

- Explanation: Incorrect answer, the correct answer “$100,000,000” was not returned in the retrieved data, due to bad PDF parsing.

Llama Parser

- Answer: The actual answer is not provided in the given context.

- Explanation: Incorrect answer, the correct answer “$100,000,000” was not returned in the retrieved data, due to bad PDF parsing.

Question from Image: How many company-operated stores did Allbirds have as of June 30, 2021?

Correct answer: 100%

For this given question, we will only be testing the Llama parser since it is the only model capable of reading data in the images.

- Answer: Not mentioned in the provided context.

- Explanation: Incorrect answer, even though the RAG system failed in retrieving the actual value as the extracted data from the pdf image which was: “35', ' 27 countries', ' Company-operatedstores as 2.5B”, the extracted data was quite messy, causing the RAG system to not retrieve it.

We've asked 10 such questions pertaining to content in text/table and summarized the results below.

Summary of all results

PyPDF: Struggles with both structured and unstructured data, leading to frequent incorrect answers. Data extraction is messy, causing confusion in RAG model responses.

PyPDF2: Performs better with table data but struggles with large datasets that confuse the model. It managed to return correct answers for some structured text data.

PDFMiner: Generally correct with text-based questions but struggles with structured data like tables, often missing key information.

Tika Python: Extracts some data but relies on pre-stored knowledge if correct data isn't retrieved, leading to frequent incorrect answers for both text and table questions.

Llama Parser: Best at handling structured text, but struggles with complex image data and messy table extractions.

From all these experiments it's fair to say that PDF parsers are yet to catch up for complex layouts and might give a tough time for downstream applications that require clear layout awareness and separation of blocks. Nonetheless we found PDFMiner and PyPDF2 as good starting points.

Enhancing Your RAG System with Advanced PDF Parsing Solutions

As shown above, PDF parsers while extremely versatile and easy to apply, can sometimes struggle with complex document layouts, such as multi-column texts or embedded images, and may fail to accurately extract information. One effective solution to these challenges is using Optical Character Recognition (OCR) to process scanned documents or PDFs with intricate structures. Nanonets, a leading provider of AI-powered OCR solutions, offers advanced tools to enhance PDF parsing for RAG systems.

Nanonets leverages multiple PDF parsers as well as relies on AI and machine learning to efficiently extract structured data from complex PDFs, making it a powerful tool for enhancing RAG systems. It handles various document types, including scanned and multi-column PDFs, with high accuracy.

Chat with PDF

Chat with any PDF using our AI tool: Unlock valuable insights and get answers to your questions in real-time.

Benefits for RAG Applications

- Accuracy: Nanonets provides precise data extraction, crucial for reliable RAG outputs.

- Automation: It automates PDF parsing, reducing manual errors and speeding up data processing.

- Versatility: Supports a wide range of PDF types, ensuring consistent performance across different documents.

- Easy Integration: Nanonets integrates smoothly with existing RAG frameworks via APIs.

Nanonets effectively handles complex layouts, integrates OCR for scanned documents, and accurately extracts table data, ensuring that the parsed information is both reliable and ready for analysis.

Takeaways

In conclusion, selecting the most suitable PDF parser for your RAG system is vital to ensure accurate and reliable data extraction. Throughout this guide, we have reviewed various PDF parsers, highlighting their strengths and weaknesses, particularly in handling complex layouts such as multi-column formats and tables.

For effective RAG applications, it's essential to choose a parser that not only excels in text extraction accuracy but also preserves the original document's structure. This is crucial for maintaining the integrity of the extracted data, which directly impacts the performance of the RAG system.

Ultimately, the best choice of PDF parser will depend on the specific needs of your RAG application. Whether you prioritize accuracy, layout preservation, or integration ease, selecting a parser that aligns with your objectives will significantly improve the quality and reliability of your RAG outputs.